Internal data structure and models

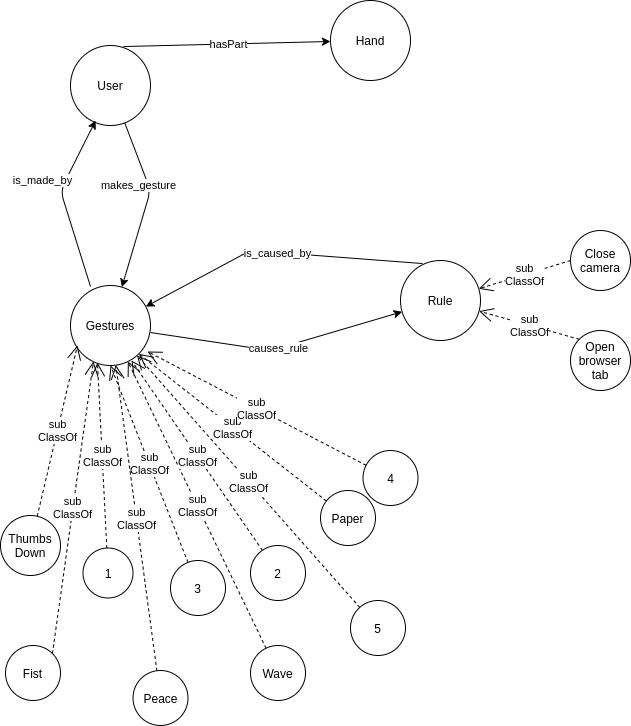

To represent the application's internal data and the links between its entities, an RDF (Resource Description Framework) schema was created. The main classes are:

- User: general information about the person who is using the webcam, together with their observed characteristics.

- Gesture: a gesture obtained by detecting, analysing and classifying the video streams.

- Rule: an action to be taken once a Gesture has been analysed.

- Hand: the base class.

- Finger: superclass of IndexFinger, MiddleFinger, RingFinger, PinkyFinger and Thumb.

- Point: the 21 common points of the Finger classes (such as PIP, TIP, DIP, CMC and IP), each represented by an x and a y coordinate.
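As a rough illustration of how these classes relate, they can be mirrored as Python dataclasses. This is a sketch under stated assumptions, not the application's code: attribute names are borrowed from the schema's properties (`has_gesture_name`, `has_gesture_time`, `has_rule_time`, `is_caused_by`, `makes_gesture`), the User field `name` is hypothetical, and the landmark subset and coordinates in the example hand are made up.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List


@dataclass
class Point:
    """A named point (e.g. "TIP", "PIP") with 2-D image coordinates."""
    name: str
    x: float
    y: float


@dataclass
class Finger:
    """Superclass of the five finger types; holds that finger's points."""
    points: List[Point] = field(default_factory=list)


class IndexFinger(Finger):
    pass


class MiddleFinger(Finger):
    pass


class RingFinger(Finger):
    pass


class PinkyFinger(Finger):
    pass


class Thumb(Finger):
    pass


@dataclass
class Hand:
    """Base class of the hand model: a hand is made of fingers."""
    fingers: List[Finger] = field(default_factory=list)

    def all_points(self) -> List[Point]:
        # Flatten the points of every finger into a single list.
        return [p for f in self.fingers for p in f.points]


@dataclass
class Gesture:
    has_gesture_name: str
    has_gesture_time: datetime


@dataclass
class Rule:
    has_rule_time: datetime
    is_caused_by: Gesture  # inverse of causes_rule in the schema


@dataclass
class User:
    name: str  # hypothetical field: the schema excerpt lists no User data properties
    makes_gesture: List[Gesture] = field(default_factory=list)


# Illustrative hand with an arbitrary subset of points (made-up coordinates).
hand = Hand(fingers=[
    Thumb([Point("CMC", 0.40, 0.80), Point("IP", 0.35, 0.60), Point("TIP", 0.33, 0.52)]),
    IndexFinger([Point("PIP", 0.50, 0.45), Point("DIP", 0.51, 0.38), Point("TIP", 0.52, 0.30)]),
])
```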

RDF schema

The following code provides a shortened version of our schema, with only one example subclass each for Gesture and Rule (Wave and CloseCamera).

For better understanding, a visual representation is also provided.

```xml
<?xml version="1.0"?>
<rdf:RDF xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
         xmlns:xsd="http://www.w3.org/2001/XMLSchema#"
         xmlns:rdfs="http://www.w3.org/2000/01/rdf-schema#"
         xmlns:owl="http://www.w3.org/2002/07/owl#"
         xml:base="http://fiigezr.org/fiiGezr.owl"
         xmlns="http://fiigezr.org/fiiGezr.owl#">

    <owl:Ontology rdf:about="http://fiigezr.org/fiiGezr.owl"/>

    <owl:ObjectProperty rdf:about="#is_caused_by">
        <rdfs:domain rdf:resource="#Rule"/>
        <rdfs:range rdf:resource="#Gesture"/>
        <owl:inverseOf rdf:resource="#causes_rule"/>
    </owl:ObjectProperty>

    <owl:ObjectProperty rdf:about="#causes_rule">
        <rdfs:domain rdf:resource="#Gesture"/>
        <rdfs:range rdf:resource="#Rule"/>
        <owl:inverseOf rdf:resource="#is_caused_by"/>
    </owl:ObjectProperty>

    <owl:ObjectProperty rdf:about="#makes_gesture">
        <rdfs:domain rdf:resource="#User"/>
        <rdfs:range rdf:resource="#Gesture"/>
    </owl:ObjectProperty>

    <owl:DatatypeProperty rdf:about="#has_gesture_time">
        <rdfs:domain rdf:resource="#Gesture"/>
        <rdfs:range rdf:resource="http://www.w3.org/2001/XMLSchema#dateTime"/>
    </owl:DatatypeProperty>

    <owl:DatatypeProperty rdf:about="#has_rule_time">
        <rdfs:domain rdf:resource="#Rule"/>
        <rdfs:range rdf:resource="http://www.w3.org/2001/XMLSchema#dateTime"/>
    </owl:DatatypeProperty>

    <owl:DatatypeProperty rdf:about="#has_gesture_name">
        <rdfs:domain rdf:resource="#Gesture"/>
        <rdfs:range rdf:resource="http://www.w3.org/2001/XMLSchema#string"/>
    </owl:DatatypeProperty>

    <owl:DatatypeProperty rdf:about="#has_gesture">
        <rdfs:domain rdf:resource="#Rule"/>
        <rdfs:range rdf:resource="http://www.w3.org/2001/XMLSchema#string"/>
    </owl:DatatypeProperty>

    <owl:Class rdf:about="#User">
        <rdfs:subClassOf rdf:resource="http://www.w3.org/2002/07/owl#Thing"/>
    </owl:Class>

    <owl:Class rdf:about="#Gesture">
        <rdfs:subClassOf rdf:resource="http://www.w3.org/2002/07/owl#Thing"/>
    </owl:Class>

    <owl:Class rdf:about="#Wave">
        <rdfs:subClassOf rdf:resource="#Gesture"/>
    </owl:Class>

    <owl:Class rdf:about="#Rule">
        <rdfs:subClassOf rdf:resource="http://www.w3.org/2002/07/owl#Thing"/>
    </owl:Class>

    <owl:Class rdf:about="#CloseCamera">
        <rdfs:subClassOf rdf:resource="#Rule"/>
    </owl:Class>

</rdf:RDF>
```
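To show what instance data conforming to this schema could look like, the sketch below serialises one Wave gesture and one CloseCamera rule as N-Triples using only the Python standard library. The individual names (`gesture_1`, `rule_1`), the timestamp, and the choice to use the gesture's class name as the value of `has_gesture_name` and `has_gesture` are assumptions for illustration. Because `is_caused_by` and `causes_rule` are declared as inverses, both directions are written explicitly so the link can be followed without an OWL reasoner.

```python
from datetime import datetime, timezone
from typing import List

# Namespace taken from the schema's default namespace (xmlns="...#").
NS = "http://fiigezr.org/fiiGezr.owl#"
RDF_TYPE = "<http://www.w3.org/1999/02/22-rdf-syntax-ns#type>"
XSD = "http://www.w3.org/2001/XMLSchema#"


def iri(local: str) -> str:
    """Full IRI, in N-Triples angle-bracket form, for a name in the fiiGezr namespace."""
    return f"<{NS}{local}>"


def literal(value: str, datatype: str) -> str:
    """Typed literal; backslashes and double quotes are escaped for N-Triples."""
    escaped = value.replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"^^<{XSD}{datatype}>'


def gesture_triples(gid: str, gesture_class: str, when: datetime) -> List[str]:
    g = iri(gid)
    return [
        f"{g} {RDF_TYPE} {iri(gesture_class)} .",
        # Assumption: the gesture name is the local name of its class.
        f"{g} {iri('has_gesture_name')} {literal(gesture_class, 'string')} .",
        f"{g} {iri('has_gesture_time')} {literal(when.isoformat(), 'dateTime')} .",
    ]


def rule_triples(rid: str, rule_class: str, gid: str, gesture_name: str,
                 when: datetime) -> List[str]:
    r, g = iri(rid), iri(gid)
    return [
        f"{r} {RDF_TYPE} {iri(rule_class)} .",
        f"{r} {iri('has_rule_time')} {literal(when.isoformat(), 'dateTime')} .",
        f"{r} {iri('has_gesture')} {literal(gesture_name, 'string')} .",
        # Inverse properties: write both directions so no reasoner is needed.
        f"{r} {iri('is_caused_by')} {g} .",
        f"{g} {iri('causes_rule')} {r} .",
    ]


when = datetime(2021, 1, 15, 12, 0, 5, tzinfo=timezone.utc)
triples = (gesture_triples("gesture_1", "Wave", when)
           + rule_triples("rule_1", "CloseCamera", "gesture_1", "Wave", when))
print("\n".join(triples))
```

Building the triples as plain strings keeps the sketch dependency-free; in practice an RDF library would normally take care of serialisation and escaping.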

Evolution of the model

The architecture of the initially proposed model has not changed much. In general, the focus shifted from the data that would form a gesture to the gesture itself and what it causes. Following this idea, classes such as Webcam and Data were removed, and instances of the Gesture and Rule classes were added.

Use of concepts

The web application creates Gesture objects from webcam-captured video streams and registers each one with the time at which it was created. The stored gestures are continuously queried through SPARQL: the most frequent gesture of the last 10 seconds is determined and, if its count exceeds a certain threshold, a Rule instance is created and bound to the gesture that caused it, for future reference.
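The rule-triggering step can be sketched as follows. Only the 10-second window comes from the description above; the rest is assumed: the threshold value, the gesture-to-rule mapping (`rule_for`), the hypothetical gesture name `Fist`, and binding the rule to the latest occurrence of the winning gesture. The counting is done in plain Python so the sketch runs on its own, while `COUNT_QUERY` is a hypothetical SPARQL 1.1 query over the schema's `has_gesture_name` and `has_gesture_time` properties that would compute the same most frequent gesture in a triple store.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List, Optional, Tuple

WINDOW = timedelta(seconds=10)  # "last 10 seconds", as described above

# Hypothetical SPARQL counterpart of the counting step; %s is replaced by the
# window start. Stored timestamps should follow one timezone convention, since
# xsd:dateTime values with and without a timezone may not be comparable.
COUNT_QUERY = """
PREFIX : <http://fiigezr.org/fiiGezr.owl#>
PREFIX xsd: <http://www.w3.org/2001/XMLSchema#>
SELECT ?name (COUNT(?g) AS ?n) WHERE {
  ?g :has_gesture_name ?name ;
     :has_gesture_time ?t .
  FILTER (?t >= "%s"^^xsd:dateTime)
}
GROUP BY ?name
ORDER BY DESC(?n)
LIMIT 1
"""


@dataclass
class Rule:
    rule_class: str         # e.g. "CloseCamera"
    has_rule_time: datetime
    is_caused_by: str       # id of the Gesture individual that triggered it


def check_rules(gestures: List[Tuple[str, str, datetime]], now: datetime,
                threshold: int, rule_for: Dict[str, str]) -> Optional[Rule]:
    """Return a new Rule if the most frequent recent gesture exceeds the threshold."""
    start = now - WINDOW
    recent = [(gid, name, t) for gid, name, t in gestures if start <= t <= now]
    if not recent:
        return None
    name, count = Counter(n for _, n, _ in recent).most_common(1)[0]
    if count <= threshold or name not in rule_for:
        return None
    # Bind the rule to the latest occurrence of the winning gesture.
    cause = max((g for g in recent if g[1] == name), key=lambda g: g[2])
    return Rule(rule_for[name], now, cause[0])


now = datetime(2021, 1, 15, 12, 0, 10)
log = [
    ("g1", "Wave", now - timedelta(seconds=12)),  # outside the window
    ("g2", "Wave", now - timedelta(seconds=8)),
    ("g3", "Fist", now - timedelta(seconds=6)),
    ("g4", "Wave", now - timedelta(seconds=4)),
    ("g5", "Wave", now - timedelta(seconds=1)),
]
rule = check_rules(log, now, threshold=2, rule_for={"Wave": "CloseCamera"})
query = COUNT_QUERY % (now - WINDOW).isoformat()
```

Here three Wave gestures fall inside the window, so with a threshold of 2 a CloseCamera rule is created and linked to `g5`; raising the threshold to 3 would produce no rule, because the count must exceed it.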